Overview

The intent of this post is to cover some fundamentals that will be referenced in future posts. The idea here is to be conversational, rather than technical; I try to avoid using big words. If you want a deeper dive, a good starting point is Ulrich Drepper’s What Every Programmer Should Know About Memory.

Alignment

Preferred/Natural Alignment

In C and C++, “scalar” types are the fundamental types that the language gives us to work with. They are the building blocks that we use to construct, well, pretty much everything: char, short, int, long, pointers, etc. They can be explicitly sized (int32_t, uint8_t, etc.) or have a size that depends on the platform (int, long, uint_fast32_t).

Each scalar type has a preferred or natural alignment, which on most platforms is equal to its size in bytes. I say “preferred” because the compiler can generate code for variables that don’t adhere to that, but accessing them can be slower.
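If you want to see natural alignment for yourself, here's a minimal sketch using C11's alignof. The is_aligned helper is a made-up name for illustration, and the asserted values are what mainstream compilers report for these fixed-width types; an unusual platform could differ.

```c
#include <assert.h>    /* static_assert (C11) */
#include <stdalign.h>  /* alignof (C11) */
#include <stddef.h>
#include <stdint.h>

/* On mainstream platforms these types are aligned to their own size. */
static_assert(alignof(uint8_t)  == 1, "1-byte type, 1-byte alignment");
static_assert(alignof(uint16_t) == 2, "2-byte type, 2-byte alignment");
static_assert(alignof(uint32_t) == 4, "4-byte type, 4-byte alignment");

/* Hypothetical helper: is this address a multiple of the alignment? */
static int is_aligned(const void *p, size_t alignment)
{
    return ((uintptr_t)p % alignment) == 0;
}
```

Passing the address of any ordinary uint32_t variable along with alignof(uint32_t) should return nonzero, because the compiler placed the variable for you.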

One of my favorite interview questions is: What is the sizeof this structure?

    struct theStruct {
        char a;
        int b;
        char c;
    };

The answer to this question is the same as most everything else in life: “It depends.” For brevity, we’re going to assume that there’s nothing special going on and that the size of a char is 1 byte and an int is 4 bytes. There’s a bunch of things that could be going on here, but if I tell you all of them, well, that’d take away my fun!

So what’s the size of the structure? Six bytes? Eight? Nine? Twelve?

topPadding

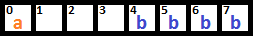

Unless told to do otherwise, the compiler places variables according to their preferred alignment. To do so, it inserts unused bytes called padding. If we have two variables:

uint8_t a;

uint32_t b;Their layout in memory looks like this:

So with that in mind, what’s the size of the structure above? Still six? Eight? Nine? Twelve? Fifty seven thousand and five?

topArrays Of Things

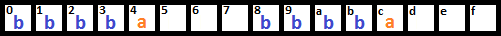

Compilers add padding so an array of items are also aligned. Consider this structure:

struct otherStruct {

uint32_t b;

uint8_t a;

};An array of these structures look like this in memory:

Thanks to that padding, every member of every structure in the array also falls on its preferred alignment. So now what is the size of our structure? Being stubborn and sticking with six, are we? How about eight? Nine? Twelve? Fifty seven thousand and five?
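To poke at the array case, here's a small sketch that measures the distance between neighboring elements. The element_stride helper is a hypothetical name, and the offsets in the comments assume a typical ABI where uint32_t is 4-byte aligned.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct otherStruct {
    uint32_t b;   /* offset 0, 4 bytes */
    uint8_t  a;   /* offset 4, 1 byte  */
                  /* 3 bytes of tail padding on typical ABIs */
};

/* Byte distance between element i and element i + 1 of an array. */
static size_t element_stride(const struct otherStruct *arr, size_t i)
{
    return (size_t)((const char *)&arr[i + 1] - (const char *)&arr[i]);
}
```

The stride always equals sizeof(struct otherStruct). The tail padding is what keeps b aligned in the second element, the third, and so on.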

For fun, what are the sizes of these structures? What do they look like in memory?

    struct argh1 {
        uint8_t a;
        uint32_t b;
        uint16_t c;
    };

    struct argh2 {
        uint8_t a;
        char * p;
    };

    struct argh3 {
        uint8_t a;
        uint8_t b[2];
    };

Why Does This Matter?

Alignment and padding are incredibly important when dealing with memory. They can affect memory usage, executable size, performance, and stability. By understanding alignment and padding, you can rearrange structures to make them smaller, which can lower memory usage, improve cache performance, etc.

Memory layout isn’t just important for memory management. It also applies to networking and file formats, and is core to pretty much everything. C and C++ are thin wrappers around memory manipulation; the more comfortable you become understanding and working with memory as a concept, the smaller, more stable, and more efficient your programs will become.

Oh, and the structure size? Twelve.
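Here's where the twelve comes from, as a sketch you can compile. It assumes the same thing the question did (a 1-byte char and a 4-byte int with 4-byte alignment). theStructReordered is a made-up name showing how the same members shrink when the largest goes first.

```c
#include <assert.h>   /* static_assert (C11) */
#include <stddef.h>   /* offsetof */

struct theStruct {
    char a;   /* offset 0, then 3 bytes of padding so b lands on 4 */
    int  b;   /* offset 4 */
    char c;   /* offset 8, then 3 bytes of tail padding */
};

/* Same members, largest first: the two chars sit side by side. */
struct theStructReordered {   /* hypothetical name */
    int  b;   /* offset 0 */
    char a;   /* offset 4 */
    char c;   /* offset 5, then 2 bytes of tail padding */
};

/* These hold when int is 4 bytes with 4-byte alignment. */
static_assert(offsetof(struct theStruct, b) == 4, "b is padded out to 4");
static_assert(sizeof(struct theStruct) == 12, "1 + 3 + 4 + 1 + 3");
static_assert(sizeof(struct theStructReordered) == 8, "4 + 1 + 1 + 2");
```

If any of those assertions fails on your compiler, congratulations, you've found one of the "it depends" cases.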

Types Of Memory

Stack

Every process and thread gets a small piece of memory called a stack. The amount available depends on how the program was compiled or the thread was started. We use it when we declare variables, call functions, etc.

Stack management is mostly automagic for the programmer. When a local variable is declared, it is pushed onto the stack. When that variable expires due to scope, the stack space is reclaimed and can be used for something else. Using too much stack space causes a stack overflow, which is effectively a buffer overrun. Put simply, it’s crashy-crash time.

You can also allocate from the stack explicitly via alloca. There is no corresponding free call because the memory is popped off of the stack once the calling function exits. Important: alloca memory sticks around until the end of the function, not the end of the current scope, so calling alloca in a loop can quickly exhaust stack space.
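A minimal sketch of the loop advice, assuming a glibc-style system where alloca lives in alloca.h (it isn't standard C, so your platform may put it elsewhere). Both function names are made up for illustration.

```c
#include <alloca.h>   /* non-standard; this header location is an assumption */
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: sums the bytes of src via a temporary stack copy.
   The caller must keep n small; alloca has no way to report failure. */
static unsigned sum_via_stack_copy(const unsigned char *src, size_t n)
{
    unsigned char *tmp = alloca(n);   /* released when this function returns */
    memcpy(tmp, src, n);
    unsigned total = 0;
    for (size_t i = 0; i < n; ++i)
        total += tmp[i];
    return total;
}

/* Looping over the helper is fine: each call gives its stack space back
   on return. Calling alloca directly inside this loop body would instead
   grow the stack on every iteration. */
static unsigned sum_many(const unsigned char *src, size_t n, int repeats)
{
    unsigned total = 0;
    for (int i = 0; i < repeats; ++i)
        total += sum_via_stack_copy(src, n);
    return total;
}
```

Pushing the alloca call down into its own function is one common way to get per-iteration cleanup without changing the loop.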

Using stack memory for certain things can be faster. Since it’s literally just advancing the stack pointer, the allocation is ridiculously fast. Deallocation doesn’t really exist because that part of the stack is simply reused when the function returns. It’s good for temporary allocations with a known ceiling size. I wouldn’t try to read a file into it, but it’s good for small pieces of data.

Heap

Heap memory is dynamic memory. Basically, anything you allocate at run time that’s not on the stack, so malloc, new, etc.

Heaps are typically managed from one or more blocks of memory. There are nearly infinite ways to manage this memory, from splitting blocks into pieces of varying sizes to chopping them up into fixed-size pieces and handing them out as needed. The next blog post goes over this in way more detail.
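To give a taste of the "fixed-size pieces" approach, here's a deliberately tiny sketch of a pool that hands out equal-sized blocks from one chunk of memory. Every name and size in it is made up for illustration, and it skips everything a real allocator needs (thread safety, double-free checks, growth).

```c
#include <assert.h>
#include <stddef.h>

#define POOL_BLOCK_SIZE  32   /* hypothetical sizes for illustration */
#define POOL_BLOCK_COUNT 4

/* A free block stores the pointer to the next free block inside itself. */
union pool_block {
    union pool_block *next;
    unsigned char     bytes[POOL_BLOCK_SIZE];
};

struct pool {
    union pool_block  blocks[POOL_BLOCK_COUNT];
    union pool_block *free_list;
};

/* Thread every block onto the free list. */
static void pool_init(struct pool *p)
{
    p->free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; ++i) {
        p->blocks[i].next = p->free_list;
        p->free_list = &p->blocks[i];
    }
}

/* Pops a block off the free list; returns NULL when none are left. */
static void *pool_alloc(struct pool *p)
{
    union pool_block *b = p->free_list;
    if (b != NULL)
        p->free_list = b->next;
    return b;
}

/* Pushes a block back onto the free list. */
static void pool_free(struct pool *p, void *ptr)
{
    union pool_block *b = ptr;
    b->next = p->free_list;
    p->free_list = b;
}
```

Allocation and free are each a couple of pointer moves, which is the appeal. The catch is that every request costs a full block no matter how small it is.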

Memory Architecture

Virtual Memory

We usually think of memory as being one massive array of contiguous space. Well, that’s an illusion. Instead, operating systems fake it by presenting an address space that appears contiguous, then mapping physical memory into that address space on demand.

Virtual memory provides address space (a promise) and mapped physical memory (the reality). Think of it like buying a home. Address space is having a note from the bank that says you own 123 Cache Lane, even though you’ve never been there. Mapped physical memory is showing up to 123 Cache Lane and going inside.
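The promise-versus-reality split can be sketched with the POSIX mmap and mprotect calls. This assumes a POSIX system such as Linux (Windows has different calls for the same idea), and the three function names are made up for illustration.

```c
#define _DEFAULT_SOURCE   /* exposes MAP_ANONYMOUS on glibc in strict modes */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>     /* mmap, mprotect, munmap */

/* The promise: reserve address space. Touching it now would fault. */
static void *reserve_address_space(size_t bytes)
{
    void *p = mmap(NULL, bytes, PROT_NONE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? NULL : p;
}

/* The reality: make a page-aligned part of the reservation usable.
   The OS backs each page with physical memory when it is first touched.
   Returns 0 on success. */
static int commit_pages(void *addr, size_t bytes)
{
    return mprotect(addr, bytes, PROT_READ | PROT_WRITE);
}

/* Hand the whole reservation back to the OS. */
static void release_address_space(void *addr, size_t bytes)
{
    munmap(addr, bytes);
}
```

Reserving a big range up front and committing pages only as needed is how those massive contiguous heaps stay contiguous without paying for memory nobody has touched yet.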

Virtual memory can be used to do things not available to regular allocations. It allows massive contiguous heaps, “edgeless” ring buffers, debug heaps that guarantee memory isn’t accessed after being freed, and even memory read/write tracking.

Physical memory can also come from many different places, even the hard drive. Virtual memory can be a powerful tool (or a painful problem.)

Uniform and Nonuniform Memory

We normally think of memory as being one massive pool that any thread or core can use at any time. Yep, you guessed it; also an illusion.

Uniform Memory Access (UMA) presents a handy illusion to the programmer that memory is available everywhere for anyone to read and write any time they want. The reality is that for most systems you’ll write code for, memory is cached. You’re never really touching the memory directly, just copies of it. Behind the scenes, there’s all sorts of machinery keeping those copies up to date.

With Non-Uniform Memory Access (NUMA), each processor gets its own block of memory which other processors can’t (easily) access. Memory bandwidth scales better as more cores are added, and workloads can be optimized so each processor mostly works out of its own memory. It can be a huge win, but it’s a difficult problem to plan for. It’s also really, really hard to convince people that it’s good to not randomly access memory that’s scattered everywhere. I mean, it sounds obvious, and yet…

One famous (infamous?) example of NUMA is the PlayStation 3’s Cell microprocessor. It had one core, the PPE, with access to a large amount of memory, plus several other cores called SPEs. Each SPE had access to only 256KB of memory, and anything it wanted to work on had to be pulled from and pushed back to main memory via asynchronous calls. You could think of it like memcpy on a thread, but faster. I absolutely loved it, if that gives you any insight into my sanity.

If you’re interested, there are plenty of resources out there on both NUMA and UMA. This topic is way more complicated and deeper than I’m going to go into here.

Performance

Cache Misses

In the discussion on UMA above, I mentioned that memory is stored in caches. A cache is simply a copy of recently used (or predicted to be used) memory. Each caching level contains a smaller amount of data than the previous level, but is also faster for the processor to access. When memory is needed, it is pulled from RAM into each level of cache. As more memory is needed, other memory is evicted from the cache to make room. The goal is that rarely or unused data stays in slow but plentiful RAM while data that’s often used stays in the small but fast cache.

A cache miss is simply a delay that occurs when the processor reads or writes data that hasn’t been populated through the various levels of cache.

Because pulling memory from RAM all the way up into registers is slow, processors move memory around in fixed-size blobs called cache lines. Most current architectures use 64-byte cache lines, so referencing a single byte of data caches 64 bytes rather than just one; it’s just more efficient.
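Here's a rough sketch of why cache lines matter to how you walk your data. Both functions (hypothetical names) compute the same sum over a grid stored row by row; the only difference is the order they touch memory in. The cache-line math in the comments assumes 4-byte ints and 64-byte lines.

```c
#include <assert.h>
#include <stddef.h>

/* Walks memory in address order. With 4-byte ints and 64-byte lines,
   16 consecutive reads come out of each cache line. */
static long sum_row_major(const int *grid, size_t rows, size_t cols)
{
    long total = 0;
    for (size_t r = 0; r < rows; ++r)
        for (size_t c = 0; c < cols; ++c)
            total += grid[r * cols + c];
    return total;
}

/* Jumps a full row ahead on every read. Once a row is 64 bytes or more,
   each read lands on a different cache line than the one before it. */
static long sum_column_major(const int *grid, size_t rows, size_t cols)
{
    long total = 0;
    for (size_t c = 0; c < cols; ++c)
        for (size_t r = 0; r < rows; ++r)
            total += grid[r * cols + c];
    return total;
}
```

On a grid too big to fit in cache, the first version tends to be noticeably faster. How much faster depends on the hardware, so measure before quoting a number.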

False Sharing

If one core is reading from a cache line that another core is writing to, it creates stalls as the written data has to be flushed to RAM or shared with the other core.

There are legitimate cases where multiple cores can share data. For example, reading the same cache line on two different cores is okay. When a single variable is used to synchronize events, updating the caches causes a stall, but we need it to happen so we can see the changes. Other times, the data just happens to exist in the same cache line and we didn’t actually mean to share anything at all. When this happens, it’s called “false sharing” because the cache lines have to be updated, but we didn’t actually need any of the other data.

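This can be fixed by reorganizing classes and structures for locality: data that is used close together in time should also be close together in memory, and data that different cores write to independently should be kept on separate cache lines.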
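One way to keep independently written data apart is C11's alignas. This is a sketch that assumes 64-byte cache lines; the struct and member names are made up, and the right line size for your target is something to check rather than trust me on.

```c
#include <assert.h>    /* static_assert (C11) */
#include <stdalign.h>  /* alignas (C11) */
#include <stddef.h>
#include <stdint.h>

#define ASSUMED_CACHE_LINE 64   /* assumption: check your target CPU */

/* The counters sit 8 bytes apart, so they will usually land in the same
   cache line. Two threads that each update "their own" counter still
   fight over that line. */
struct counters_shared_line {
    uint64_t hits_thread_a;
    uint64_t hits_thread_b;
};

/* alignas pushes each counter onto its own cache line. */
struct counters_separate_lines {
    alignas(ASSUMED_CACHE_LINE) uint64_t hits_thread_a;
    alignas(ASSUMED_CACHE_LINE) uint64_t hits_thread_b;
};

static_assert(offsetof(struct counters_separate_lines, hits_thread_b)
              - offsetof(struct counters_separate_lines, hits_thread_a)
              >= ASSUMED_CACHE_LINE, "counters must not share a line");
```

The price is size: the padded version is 128 bytes instead of 16. That's padding working for you instead of against you, but it's still a trade.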

Skipping The Details

Yeah, I’m skipping a lot of details here. I’m not discussing shared caches, prefetching, lookup, eviction, page faults, or even going into detail about caches. I want you to understand the general idea and dig into details when needed, not bore you with how the MESI protocol works.

Show Me The Numbers

These numbers should be taken with a huge grain of salt. Tech changes rapidly, so yesterday’s, tomorrow’s, and even today’s costs vary wildly. They also depend on architecture, processor, RAM speed, etc., so keep in mind that any numbers I post here are, well, wrong to someone.

So, how fast is it when the CPU accesses memory? Depending on your CPU, there can be three levels of cache: L1, L2, and L3, also called the Last Level Cache (LLC). If memory isn’t in the caches already, it must be fetched from RAM. If it’s not there (e.g., the OS has paged the memory out to disk), we have to go to a drive of some sort, so either an SSD or a physically spinning HDD.

I like to think of memory as eating a cookie. The numbers below are based on Steam Hardware Survey results showing an average 3.5GHz CPU, data from the Intel 64 and IA-32 Architectures Software Developer Manuals, RAM latency calculations, and various resources comparing the performance of SSDs and HDDs:

| Memory is in: | Access Time: | The cookie is: |
| --- | --- | --- |
| Register | 1 cycle | in your hand. |
| L1 | 4 cycles | in the cookie jar. |
| L2 | 12 cycles | in your neighbor’s cookie jar. |
| LLC/L3 | 29 cycles | an Olympic sized swimming pool away. |
| RAM | 38 cycles (+LLC) | a soccer pitch/football field away. |
| SSD | 45,097 cycles | on the moon. |
| HDD | 15.7 million cycles | on the sun. |

These numbers are estimates and highly optimistic. They depend on a bunch of factors; RAM latency, in particular, is sensitive to a host of conditions that will only make it worse. Basically, you’ll never see numbers this good; it’ll only ever be slower.
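If cycles feel abstract, they convert to wall-clock time easily enough. This sketch assumes the same 3.5GHz clock the table is based on; the macro and function names are made up.

```c
#include <assert.h>

#define ASSUMED_CLOCK_GHZ 3.5   /* the 3.5GHz CPU the table is based on */

/* At f GHz the CPU runs f cycles per nanosecond, so ns = cycles / f. */
static double cycles_to_nanoseconds(double cycles)
{
    return cycles / ASSUMED_CLOCK_GHZ;
}
```

Under that assumption, the 4-cycle L1 hit is a bit over 1 nanosecond, the 45,097-cycle SSD access is roughly 12.9 microseconds, and the 15.7 million cycle HDD access is roughly 4.5 milliseconds.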

Summary

So where does this put us? This post was mostly about how memory is organized, stored and retrieved but didn’t give a lot of detail on managing memory. I mean, that was the whole point of all of this, right?

Well, yeah, of course. But I had to cover some fundamental knowledge so later, when I say things like “we have to explicitly pack the soandso” you’ll understand what I mean and why it needs to be done (assuming you didn’t already know.)

The next post discusses the weapons chest. This is where we really start getting into the tools that can be used to manage memory, from metrics and data gathering to different types of allocators that can be used to hand out memory.
